Multi-Project Pipelines with GitLab-CE


This year at the DevOps Gathering 2019 conference in Bochum, Alexander and I met Peter Leitzen, a backend engineer at GitLab. We chatted with him about our on-premises GitLab-CE environment and how we run GitLab Multi-Project Pipelines without GitLab-EE (GitLab Enterprise Edition). We promised him that we would write a blog post about our setup. As so often, it took some time until we were able to visualize and describe it. Sorry! But now, here we go.

We will not share our concrete implementation here, because it would not help much: everyone has a different setup, different knowledge, or uses another programming language. Instead, we describe what we are doing (the idea), not how we are doing it. You can use whatever programming language you like to talk to the GitLab API; it doesn't matter.

Some background story

We have a lot of projects and, of course, a lot of users in our private GitLab. At the time of writing there are approximately 1500 projects and around 400 users, as we have been using GitLab for more than five years. Not only developers use our GitLab, but also colleagues who just want to version their configuration files, and much more.

With GitLab-EE it is possible to run multi-project pipelines, but this is a premium feature (Silver level) which costs $19 per user per month. Only some of our 400 GitLab users need multi-project pipelines, but sadly there is no way to subscribe only some users to GitLab Premium. 🙄

Back then (we started more than two years ago) we were sure that we would need multi-project pipelines for our new Docker Swarm environments, and that is still true today. Together with the developers we decided to have classic source code Git repositories and separate deploy Git repositories, for several reasons:

- The developers use multiple application stacks, and we do not want to mix them up with deploy information.

- It should be possible to set up the source code repositories, including the needed .gitlab-ci.yml, through a pipeline. Pipelines generate source code repositories with preconfigured settings to run pipelines.

- Developers should be able to push source code, but not all developers should implicitly be able to run deploy pipelines. For example, external developers should be able to push code, but they should not be able to run deploy pipelines.

You can imagine the two repositories as a car's body and its chassis being married. After the pipeline run you have the sum of the source code repository and the deploy repository, namely the container image.

The idea

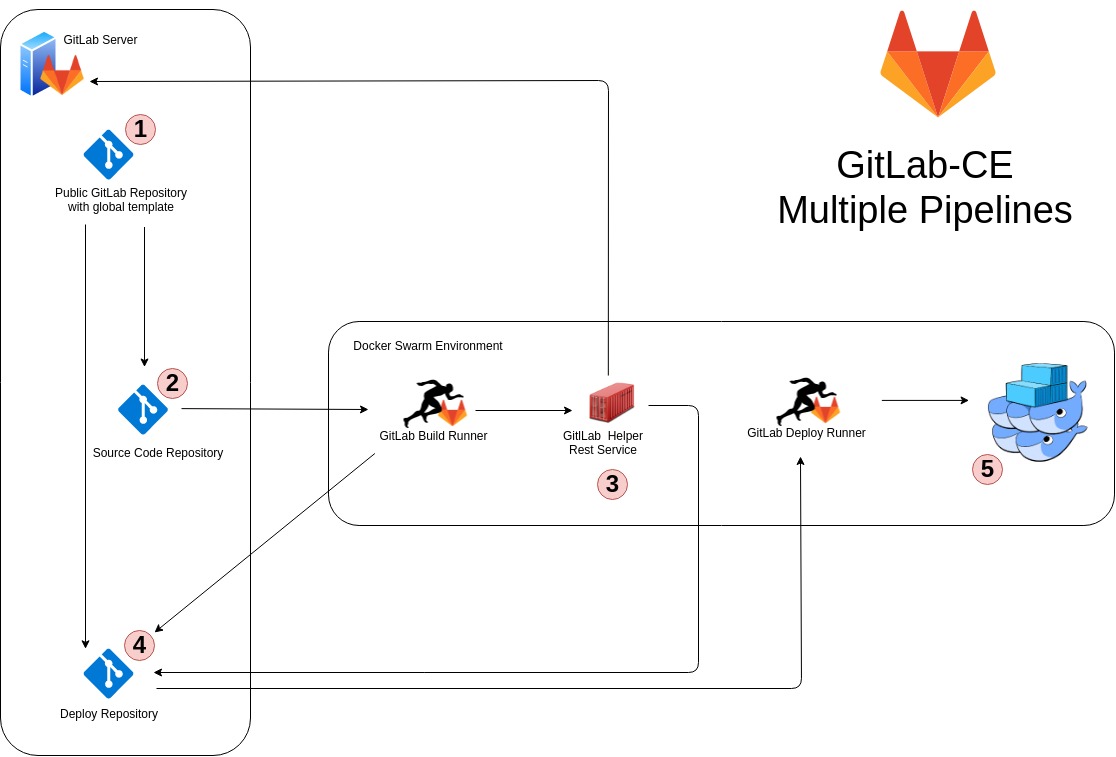

Let's show the idea (see the picture above). We have numbered the individual steps so that we can explain them in more detail. Let's start with some general information about the environment.

Overview

The left side of the picture shows our GitLab server installation, which hosts all the repositories. We have been fully committed to a 100% GitOps approach since we started our container journey. Nothing happens to our applications in our Docker Swarm environments without a change in the corresponding Git repository. Later you will see that we have built something similar to Kubernetes Helm, but much simpler. 😂 Let's dive into the details.

One

Point one shows a public(!) repository that we use as the source for our GitLab CI/CD templates. GitLab introduced CI/CD templates with the release of GitLab v11.7, and we make use of them. Inside the templates, Alex has built up a general structure for the different CI/CD stages. For example, the .gitlab-ci.yml file of one of our real-world projects looks just like this:

```yaml
include: 'https://<our-gitlab-server>/public-gitops-ci-templates/gitops-ci-templates/raw/master/maven-api-template.yml'
image: <our-gitlab-registry>/devops-deploy/maven:3.6-jdk-12-se3
```

Yes, you see right! That's all! That's the whole .gitlab-ci.yml of a production Git project. All the magic is managed centrally by the public GitLab templates. In this case, we are looking at one of our developers' projects.

The nice thing about GitLab templates is that values can be overridden, like the image keyword in this example! It stays simple: at any time you can look into the template to see what is going on, because there is no hidden abstraction magic. By the way, there is absolutely no sensitive information in the templates! Everything project-specific is managed by the affected project itself. That's the link to point number two.
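The override mechanism can be pictured with a small Go model. This is a simplified illustration of GitLab's documented `include` behavior, not GitLab's code and not part of our setup. Included configuration is deep-merged with the local `.gitlab-ci.yml`, local keys win, and arrays are replaced rather than merged. The function `mergeCI` and the template contents are hypothetical.

```go
package main

import "fmt"

// mergeCI is a simplified model of how an included template and the local
// .gitlab-ci.yml are combined: nested maps are merged key by key, while any
// other value (strings, arrays) defined locally replaces the template value.
func mergeCI(template, local map[string]any) map[string]any {
	out := make(map[string]any, len(template))
	for k, v := range template {
		out[k] = v
	}
	for k, lv := range local {
		tm, tIsMap := out[k].(map[string]any)
		lm, lIsMap := lv.(map[string]any)
		if tIsMap && lIsMap {
			out[k] = mergeCI(tm, lm) // recurse into nested job definitions
			continue
		}
		out[k] = lv // scalars and arrays: the local value wins outright
	}
	return out
}

func main() {
	// Hypothetical template content, loosely modeled on a Maven template.
	template := map[string]any{
		"image":  "maven:3.6-jdk-11",
		"stages": []any{"build", "test", "deploy"},
		"build": map[string]any{
			"stage":  "build",
			"script": []any{"mvn package"},
		},
	}
	// The project's own .gitlab-ci.yml only overrides the image.
	local := map[string]any{
		"image": "<our-gitlab-registry>/devops-deploy/maven:3.6-jdk-12-se3",
	}
	merged := mergeCI(template, local)
	fmt.Println(merged["image"])  // the project's image
	fmt.Println(merged["stages"]) // [build test deploy], still from the template
}
```

The `build` job and the stage list come from the template untouched. Only the image the project cares about is replaced.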

Two

As shown, the .gitlab-ci.yml in the source code Git repository imports one of the centrally maintained global templates. As said before, all relevant parameters for the templates are provided by the source code repository. Many of them are secret variables, so Alex built a special pipeline that bootstraps a new Git project when needed. It is not part of this post, because it is very specific and depends on your needs. But for the explanation it is important to know one thing. During the creation of a new source code project, two GitLab project variables called SCI_DEPLOY_PRIVATE_KEY and SCI_DEPLOY_PRIVATE_KEY_ID are created and initialized.
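The bootstrap pipeline itself is out of scope here. Storing the two variables, however, boils down to GitLab's project-level variables API (`POST /projects/:id/variables` with `key` and `value`). The following Go sketch only builds such a request and does not send it. The base URL, token, project ID and the value `7` are placeholders, and `newProjectVariableRequest` is a hypothetical helper, not Alex's bootstrap code.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"strings"
)

// newProjectVariableRequest builds (but does not send) a GitLab API v4 call
// that creates a CI/CD variable in a project: POST /projects/:id/variables.
// Hypothetical helper; baseURL and token are placeholders.
func newProjectVariableRequest(baseURL, token string, projectID int, key, value string) (*http.Request, error) {
	form := url.Values{}
	form.Set("key", key)
	form.Set("value", value)
	endpoint := fmt.Sprintf("%s/projects/%d/variables", strings.TrimRight(baseURL, "/"), projectID)
	req, err := http.NewRequest(http.MethodPost, endpoint, strings.NewReader(form.Encode()))
	if err != nil {
		return nil, err
	}
	req.Header.Set("PRIVATE-TOKEN", token)
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	return req, nil
}

func main() {
	// Hypothetical bootstrap step: store the deploy key ID for project 42.
	req, err := newProjectVariableRequest("https://gitlab.example.com/api/v4", "<admin-token>", 42,
		"SCI_DEPLOY_PRIVATE_KEY_ID", "7")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL)
}
```

The endpoint also accepts options such as `protected`, `masked` and `variable_type`. Whether they fit depends on your branch model. A multi-line PEM key, for example, does not meet GitLab's masking requirements.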

Now the CI/CD job of the source repository is handed to a GitLab Runner and started. The job itself uses a self-made service that kicks off the GitLab Multi-Project Pipelines functionality. So we have to head over to number three: the self-written GitLab Helper Rest Service.

Three (Part 1)

In the previous step, the GitLab CI/CD pipeline of the source repository was triggered. At this point, imagine that the deploy job of the source repository is running. The deploy stage is part of the .gitlab-ci.yml template mentioned before. In the template, the following happens (this is the hardest part of all):

First of all, a SCI_JWT_TOKEN is generated to establish a solid level of trust. The communication between the pipeline runner and our GitLab Helper Rest Service is TLS-encrypted, but we want to be sure that only valid calls are processed.

Take a look at the script line below: this is where SCI_DEPLOY_PRIVATE_KEY comes back into play. The little tool /usr/local/bin/ci-tools is part of the Docker image used during the GitLab deploy pipeline run. It is self-written and does nothing more than generate and sign a JWT token. It is written in Go: simple and efficient. The tool needs some important parameters:

- jobId=$CI_JOB_ID is the ID of the currently running job. The GitLab Helper Rest Service uses it to verify that it is being called by a job that is currently running, not by an old or dead one. This improves the trust once more.

- projectId=$CI_PROJECT_ID is the ID of the project in which this job is running.

In summary, we have a signed JWT token that contains the ID of the currently running job and the ID of the project it belongs to.

```yaml
...
.deploy-template: &deploy-template
  script:
    ...
    - export SCI_JWT_TOKEN=$(echo "$SCI_DEPLOY_PRIVATE_KEY" | /usr/local/bin/ci-tools crypto jwt --claims "jobId=$CI_JOB_ID" --claims "projectId=$CI_PROJECT_ID" --key-stdin)
    ...
```

After the SCI_JWT_TOKEN is generated, it is used to call the GitLab Helper Rest Service via curl (TLS-encrypted). Please note the deployKey method in the REST call. Also note that a variable called SCI_DOCKER_PROJECT_ID is used as a REST parameter. It references the Docker deploy project, which is number four in our overview!

The GitLab Helper Rest Service verifies that the jobId=$CI_JOB_ID transmitted in the JWT token is valid by looking up the job via the GitLab API. It then creates and enables a GitLab deploy key on the deploy project, if the key isn't already enabled there. That's the trick to enable GitLab Multi-Project Pipelines automatically!

```yaml
...
- curl -i -H "Authorization:Bearer $SCI_JWT_TOKEN" -H "Consumer-Token:$SCI_INIT_RESOURCE_REPO_TOKEN" -X POST "${SCI_GITLAB_SERVICE_URL}/deployKey/enable/${SCI_DOCKER_PROJECT_ID}/${SCI_DEPLOY_PRIVATE_KEY_ID}" --fail --silent --connect-timeout 600
...
```

Sadly, the GitLab Helper Rest Service needs global administrative permissions in the GitLab installation to handle these tasks. That's the price to pay.

But we are not finished yet - here comes more cool stuff!

Three (Part 2)

At this point, the source code repository pipeline has set up the deploy pipeline. Based on the template variable configuration, the .gitlab-ci.yml for the deploy repository pipeline is generated automatically! You can think of this as a kind of Kubernetes Helm, just for GitLab and simply integrated into the GitLab repositories: GitOps! Each and everything is stored in the repositories! The last thing to do is to push the generated configuration into the deploy repository. This is easy, because the GitLab deploy key was set up just before. 😇

And now some additional cool magic. Thanks to the commit into the deploy repository in the last step, the GitLab Helper Rest Service could simply run the pipeline that gets created automatically. But then we would lose the information about who triggered the pipeline. Therefore, an additional GitLab Helper Rest Service REST call is issued (see below). It reads out the user who started the job jobId=$CI_JOB_ID in the source code repository. The GitLab Helper Rest Service then impersonates exactly this user and creates and runs the deploy pipeline in the deploy repository as this user 😎. The deploy pipeline thus runs as the same user as the pipeline in the source code repository. Nice!

```yaml
...
- curl -i -H "Authorization:Bearer $SCI_JWT_TOKEN" -H "Consumer-Token:$SCI_INIT_RESOURCE_REPO_TOKEN" -X POST "$SCI_GITLAB_SERVICE_URL/pipeline/create/$SCI_DOCKER_PROJECT_ID" --data "branch=$SCI_DOCKER_SERVICE_BRANCH_NAME" --fail
...
```

This is also a security feature, because the user who runs the source code pipeline must also be a member of the deploy repository. Otherwise the GitLab Helper Rest Service cannot create the pipeline. This lets us have developers who can push to the source code repository but cannot run deploys: they are simply not members of the deploy repository.

Four

This is the deploy Git repository. It is used to run the deploys and it is part of our way to run GitLab Multi-Project Pipelines.

Five

The deploy pipeline takes the Docker Swarm configuration and updates the Docker Swarm stack (and its Docker services). That configuration was generated by the source code pipeline run (like Kubernetes Helm) and pushed to the deploy repository by our GitLab Helper Rest Service.

Conclusion

This blog post gives you an idea of how to build multi-project pipeline functionality with GitLab-CE (on-premises) alone. The idea enables you to have

- multi-project pipelines

- JWT signed tokens for information transport

- deploy pipelines that are triggered by the same user as the source code repository pipeline

The cost is that you have to build an external service with administrative GitLab permissions. Warning: do not write such a service if you are not familiar with how to do so securely!

Hopefully GitLab will make Multi-Project Pipelines available to GitLab-CE users for free in the future!

If you have questions or would like to say thank you, please contact us! If you like this blog post, please share it! Our bio-pages and contact information are linked below!

Alex, Mario